Many enterprises that run PostgreSQL databases for their applications face the same expensive reality. When that operational data needs to be analyzed or fed to AI models, teams build ETL (Extract, Transform, Load) data pipelines to move it into analytical systems. These pipelines require dedicated data engineering teams, break frequently and create delays measured in hours or days between when data is written to a database and when it becomes available for analysis.

For companies with large numbers of PostgreSQL instances, this infrastructure tax is enormous. More critically, the approach was not designed for a world where AI agents generate and deploy applications at machine speed, creating new tables, events and workflows faster than any data engineering team can keep up with.

Databricks is betting that this architecture is fundamentally broken. The company is buying Mooncake, an early-stage startup focused on bridging PostgreSQL and lakehouse formats, with the aim of eliminating the need for ETL pipelines entirely. Financial terms of the deal were not publicly disclosed. The technology promises to make operational data immediately available for analytics and AI workloads, with performance improvements of 10x to 100x for common data movement operations.

The acquisition, announced today, comes just months after Databricks acquired Neon, a serverless PostgreSQL provider. But the speed of this second deal reveals something more urgent. Nikita Shamgunov, who joined Databricks as VP of Engineering after running Neon, told Databricks co-founder and chief architect Reynold Xin that Databricks should buy Mooncake on literally his first day at the company.

"The first day, when we closed, Nikita said, 'Hey, we should buy this company,'" Xin told VentureBeat in an exclusive interview. "And I was like, 'Hey, you still don't know where the bathrooms are.' Then over time… I got to know more about what the company does. Like, wow, Nikita was 100% right. It's such a no-brainer to do it."

The agent infrastructure gap

What made Mooncake urgent was the ongoing acceleration of AI agents.

"Eighty percent of Neon's customer databases have already been created by agents," Xin said, describing the changes happening across the Neon platform. "And that is actually enabled by the separation of storage and compute architecture that the Neon team pioneered."

This creates a fundamental problem. Agents that build applications are expected to work with PostgreSQL, which is a transactional database. However, as soon as those applications need to run analytics, the data must exist in columnar formats optimized for analytical queries. Historically, that required building and maintaining ETL pipelines: expensive, fragile systems that break frequently and require dedicated data engineering teams.
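To make the row-versus-columnar distinction concrete, here is a minimal illustrative sketch in Python. It is not Databricks or Mooncake code; the `orders` fields and the `to_columnar` helper are hypothetical. A transactional database keeps each record's fields together, while an analytical format stores each field as its own column, so an aggregate such as a total only has to scan one column.

```python
# Row-oriented layout: each record keeps all of its fields together,
# which suits transactional reads and writes of a single order.
rows = [
    {"order_id": 1, "customer": "acme", "amount": 120.0},
    {"order_id": 2, "customer": "globex", "amount": 75.5},
    {"order_id": 3, "customer": "acme", "amount": 30.0},
]


def to_columnar(records):
    """Pivot a list of row dicts into one list per column.

    Hypothetical helper; assumes every record has the same fields.
    """
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


cols = to_columnar(rows)
# An analytical aggregate only needs to scan the "amount" column.
total = sum(cols["amount"])
print(total)  # 225.5
```

Real columnar formats such as Parquet, which underlies Iceberg and Delta tables, add compression and file-level statistics on top of this basic layout; the pivot above only shows why the two shapes serve different workloads.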

"I don't think it's fair for agents to be part of the ETL as part of building these next-generation applications," Shamgunov told VentureBeat. "I think what agents expect now is the ability to iterate very quickly, and then the infrastructure should give the agents fairly uniform access to the data."

Beyond the cake: Moonlink's architectural advantages

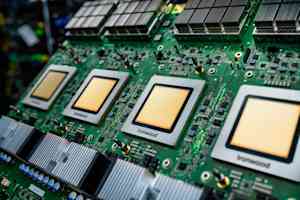

Mooncake has several technologies in its portfolio. There is the pg_mooncake extension, which lets analytical workloads run on PostgreSQL. Then there is the Moonlink component, which Shamgunov describes as an acceleration layer. It enables real-time conversion between row-oriented PostgreSQL data and columnar analytics formats without traditional ETL pipelines.

"Moonlink allows you to basically create a mirror of your OLTP data in a columnar representation in Iceberg and Delta," Shamgunov explained. "Moonlink also supports a layer of acceleration as well. So in many places, you accumulate latency when you query the data lake, through metadata queries or S3, both on the way in and on the way out."
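The idea of a continuously updated columnar mirror can be sketched as applying a stream of row-level change events (insert, update, delete) to column arrays instead of rerunning a batch ETL job. The Python below is a hypothetical toy, not Moonlink's implementation or API. It assumes each event carries the primary key, keeps columns append-only, and masks superseded rows with a set of deleted positions, loosely in the spirit of Iceberg position deletes or Delta deletion vectors.

```python
from typing import Any


class ColumnarMirror:
    """Toy columnar mirror fed by row-level change events (hypothetical).

    Columns are append-only, like immutable data files; updates and deletes
    mask the old row position rather than rewriting columns in place.
    """

    def __init__(self, key: str, columns: list[str]) -> None:
        self.key = key  # primary-key column; must be listed in columns
        self.columns = columns
        self.data: dict[str, list[Any]] = {c: [] for c in columns}
        self.position: dict[Any, int] = {}  # primary key -> live row position
        self.deleted: set[int] = set()  # positions hidden from scans

    def _append(self, row: dict[str, Any]) -> None:
        self.position[row[self.key]] = len(self.data[self.key])
        for c in self.columns:
            self.data[c].append(row[c])

    def apply(self, op: str, row: dict[str, Any]) -> None:
        """Apply one change event; op is 'insert', 'update' or 'delete'.

        A delete event only needs the primary-key field in `row`.
        """
        if op in ("update", "delete"):
            # Mask the previous version of this key.
            self.deleted.add(self.position.pop(row[self.key]))
        if op in ("insert", "update"):
            self._append(row)

    def scan(self, column: str) -> list[Any]:
        """Return the live values of one column, skipping masked positions."""
        return [v for i, v in enumerate(self.data[column]) if i not in self.deleted]


mirror = ColumnarMirror(key="order_id", columns=["order_id", "amount"])
mirror.apply("insert", {"order_id": 1, "amount": 120.0})
mirror.apply("insert", {"order_id": 2, "amount": 75.5})
mirror.apply("update", {"order_id": 1, "amount": 99.0})
mirror.apply("delete", {"order_id": 2})
print(mirror.scan("amount"))  # [99.0]
```

Masking instead of rewriting keeps each change cheap to apply; a production system would also need to compact masked rows periodically and persist the columns as files, which this sketch leaves out.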

The performance implications are dramatic. For operations such as moving data from the data lake into what Databricks calls Lakebase (its OLTP database offering), Shamgunov said the improvements range from "10 to 100 times faster," and can be "almost infinitely faster" for bulk parallel operations such as data format conversions.

For Xin, it is all about widening the pipe.

"Imagine, in the past, the OLTP database always had just one small pipe; the pipe could be a JDBC driver, and it's very narrow. It's fast, but very low throughput," Xin explained by way of analogy. "With Mooncake and other things we're developing, we can now create an infinite number of pipes, and those pipes are much bigger than a single-threaded JDBC thing."
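Xin's pipe analogy maps onto a familiar pattern: instead of reading a whole table through one sequential connection, split it into ranges and read the ranges concurrently. The Python sketch below is hypothetical and says nothing about how Mooncake actually moves data; `read_range` stands in for a single connection reading one slice, and `split_ranges` and `parallel_read` are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_ranges(total_rows: int, num_pipes: int) -> list[tuple[int, int]]:
    """Split [0, total_rows) into num_pipes contiguous, near-equal ranges."""
    step, extra = divmod(total_rows, num_pipes)
    ranges, start = [], 0
    for i in range(num_pipes):
        # The first `extra` ranges take one additional row each.
        stop = start + step + (1 if i < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def read_range(table: list[int], start: int, stop: int) -> list[int]:
    """Hypothetical stand-in for one connection ("pipe") reading one slice."""
    return table[start:stop]


def parallel_read(table: list[int], num_pipes: int) -> list[int]:
    """Read every range concurrently and reassemble rows in their original order."""
    ranges = split_ranges(len(table), num_pipes)
    with ThreadPoolExecutor(max_workers=num_pipes) as pool:
        # pool.map yields results in the order of `ranges`, not completion order.
        parts = pool.map(lambda r: read_range(table, *r), ranges)
    return [row for part in parts for row in part]


table = list(range(10))
print(split_ranges(len(table), 3))  # [(0, 4), (4, 7), (7, 10)]
result = parallel_read(table, num_pipes=3)
```

In CPython, threads only pay off when each pipe spends its time waiting on I/O, such as network or object-storage reads; CPU-bound conversion work would need processes or a native engine to scale across cores.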

How Databricks stacks up against other PostgreSQL providers

The acquisition of Mooncake positions Databricks directly against the PostgreSQL offerings of the major cloud providers, notably Google's AlloyDB and Amazon Aurora.

All three systems offer separation of storage and compute, but Databricks executives argue their architecture provides fundamental advantages. Shamgunov emphasized that Databricks brings analytical and operational data together, with both ends managed and deeply integrated, resulting in faster data movement and lower latency.

The competitive dynamic is nuanced. Databricks competes with all three major cloud providers while also partnering with them.

"We also work closely with Google to bring in data from joint lakehouse customers who do this," Xin noted. "So it's not just a competitive situation. I mean, we compete with all the CSPs at the same time."

Under Databricks ownership, Neon is now also competing aggressively on price. Before the acquisition, Neon's cheapest paid monthly plan cost $25; it has since dropped dramatically, to just $5.

"We did the opposite of what maybe many people thought we would do, which is, after the acquisition, we lowered the price," Xin said.

What this means for enterprises

For organizations managing hundreds of operational PostgreSQL databases alongside data lakes, the immediate impact is clear. Development teams will no longer need to wait for data engineering to build and maintain pipelines before accessing operational data for analytics or AI workloads.

For data platform teams, this means rethinking infrastructure management from the ground up.

Instead of maintaining complex pipeline orchestration between operational and analytical systems, teams can focus on governance, access control and workload optimization through a unified platform. This shift frees up engineering resources while reducing the surface area for data quality issues and pipeline failures. For enterprises building agentic applications, the unified architecture removes the dependence on data engineering as a prerequisite for launching new workloads.

"I think what agents expect now is the ability to iterate very quickly, and then the infrastructure should give the agents fairly uniform access to data," Shamgunov said.